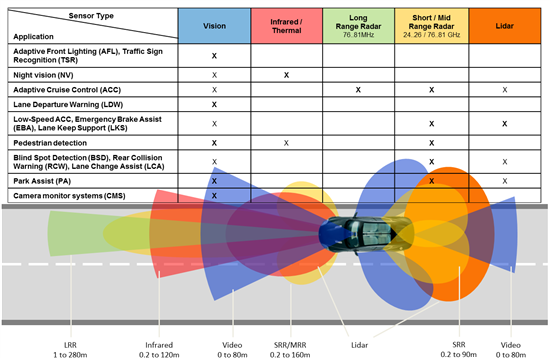

With the advent of Advanced Driver Assistance Systems (ADAS) and the drive towards autonomous vehicles, automobiles need to be aware of their surroundings. As drivers, we are able to sense our surroundings, make judgments and react quickly to a variety of conditions. However, we are not perfect: we get tired, get distracted and make mistakes. To improve safety, automobile manufacturers are designing ADAS into cars. Automobiles rely on a variety of sensors for awareness of their surroundings under many different conditions. The sensor data is then passed to highly sophisticated processors, such as TI’s TDA2x, and used for functions such as Automatic Emergency Braking (AEB), Lane Departure Warning (LDW) and Blind Spot Detection.

There are several types of sensors commonly used for awareness.

Passive sensors – used to sense radiation reflected or emitted from objects.

- Visible image sensors – all imagers operating in the visual spectrum

- Infrared image sensors – operate outside of the visual spectrum. These can be near-infrared (NIR) or thermal (far-infrared)

Passive sensors are affected by the environment, such as the time of day and the weather. For example, visible sensors are affected by the amount of visible light available at different times of the day.

Active sensors – emit radiation and measure the reflected signal. Their advantage is the ability to obtain measurements at any time, regardless of the time of day or season.

- Radar – emits radio waves that bounce off an object to determine its range, direction and speed

- Ultrasonic – emits ultrasonic sound waves that bounce off an object to determine its range

- Lidar – scans an infrared laser that bounces off an object to determine its range

- Time of Flight – a camera that measures the time it takes for an emitted infrared light beam to bounce off an object and return to the sensor, in order to determine the object's range

- Structured Light – a known light pattern is projected onto an object, usually by a TI DLP. The deformation of this pattern is captured by a camera and analyzed to determine distance
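Most of the active sensors above rely on the same basic arithmetic: the signal travels to the object and back, so the range is half the round-trip path. The following is a minimal Python sketch of that relationship, plus the standard Doppler formula a radar can use to estimate radial speed. The function names and the speed-of-sound constant are illustrative assumptions, not part of any TI software.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # radar, lidar, time of flight
SPEED_OF_SOUND_M_S = 343.0          # ultrasonic; approximate value for dry air near 20 °C


def range_from_round_trip(round_trip_s: float, wave_speed_m_s: float) -> float:
    """Distance to the reflecting object, given the echo's round-trip time."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The signal travels out and back, so halve the total path length.
    return wave_speed_m_s * round_trip_s / 2.0


def doppler_radial_speed(doppler_shift_hz: float, carrier_hz: float) -> float:
    """Radial speed of a target from the radar Doppler shift.

    A positive shift means the target is approaching. The factor of two
    accounts for the shift being applied on both the outbound and the
    reflected path.
    """
    if carrier_hz <= 0:
        raise ValueError("carrier frequency must be positive")
    return doppler_shift_hz * SPEED_OF_LIGHT_M_S / (2.0 * carrier_hz)
```

For instance, a light pulse that returns after 200 ns corresponds to a range of roughly 30 m, while an ultrasonic echo that returns after 10 ms corresponds to roughly 1.7 m. Real sensors also have to deal with noise, multiple echoes and calibration, which this sketch ignores.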

To provide increased accuracy, reliability and robustness under a wide variety of conditions, more than one type of sensor is often needed to view the same scene. All sensor technologies have inherent limitations and advantages. Fusing the data from different sensors looking at the same scene combines their strengths into a more robust solution: “Fusion eliminates confusion.” One example is the combination of visible sensors and radar.

The advantages of visible sensors include high resolution and the ability to identify and classify objects, and they provide vital intelligence. However, their performance is affected by the amount of available light and by weather conditions such as fog, rain and snow. Additional factors such as heat degrade the image by adding noise. Sophisticated image processing available on TI processors can mitigate some of this.

Radar, on the other hand, can see through fog, rain or snow, and can measure distance very quickly and effectively. Doppler radar has the added advantage of being able to detect the motion of objects. However, radar has lower resolution and cannot easily identify objects. The fusion of visible and radar data provides a solution that is much more robust under a wide variety of conditions.
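One simple way to picture camera and radar fusion is to pair each camera detection (which knows what the object is) with the radar detection at nearly the same bearing (which knows how far away it is and how fast it is moving). The sketch below uses greedy nearest-bearing matching. The data classes, the `fuse` function and the 2-degree gate are all hypothetical choices made for illustration, and production systems typically use more elaborate association and tracking.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class CameraDetection:
    label: str          # what the image classifier thinks the object is
    bearing_deg: float  # angle from the vehicle's forward axis


@dataclass
class RadarDetection:
    bearing_deg: float
    range_m: float
    radial_speed_m_s: float


@dataclass
class FusedObject:
    label: str
    bearing_deg: float
    range_m: Optional[float]           # None when no radar return matched
    radial_speed_m_s: Optional[float]


def fuse(camera: List[CameraDetection],
         radar: List[RadarDetection],
         max_bearing_gap_deg: float = 2.0) -> List[FusedObject]:
    """Greedy nearest-bearing association of camera and radar detections."""
    unused = list(radar)
    fused: List[FusedObject] = []
    for cam in camera:
        # Closest remaining radar return in bearing, if any are left.
        best = min(unused,
                   key=lambda r: abs(r.bearing_deg - cam.bearing_deg),
                   default=None)
        if best is not None and abs(best.bearing_deg - cam.bearing_deg) <= max_bearing_gap_deg:
            unused.remove(best)
            fused.append(FusedObject(cam.label, cam.bearing_deg,
                                     best.range_m, best.radial_speed_m_s))
        else:
            # The camera sees it, but radar gives no range for it.
            fused.append(FusedObject(cam.label, cam.bearing_deg, None, None))
    # Radar-only returns are kept: something is there, even if unclassified.
    for r in unused:
        fused.append(FusedObject("unknown", r.bearing_deg,
                                 r.range_m, r.radial_speed_m_s))
    return fused
```

Keeping the unmatched detections from both sensors reflects the point made above: in fog the camera may miss an object that radar still reports, and radar alone cannot say what the object is.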

Also, the cost varies between different sensors, which influences the best choice for a particular application. For example, laser radar (LIDAR) provides very accurate distance measurement but is more expensive than a passive image sensor. As development continues, costs will decrease and eventually cars will rely on a whole variety of sensors to become aware of their environment.

The TDA family of processors is highly integrated and built on a programmable platform that meets the intense processing needs of ADAS-enabled automobiles. Data from different sensors looking at the scene can be fed into the TDA2x and combined into a more complete picture that allows quick and intelligent decision making. For example, a visual sensor may show a mailbox that looks like a human in dusk conditions. The TI processor can perform sophisticated pedestrian detection that, based on the proportions of the object, may identify it as a possible human by the side of the road. However, data from a thermal sensor would identify the object as too cold to be alive and therefore probably not a human. In this way, different sensors operating in different modalities provide an added level of safety.
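The mailbox example can be expressed as a small decision rule that cross-checks the visual detector against the thermal reading. This is only a sketch: the function name, the labels, the score threshold and the warmth margin are hypothetical values chosen for illustration, not parameters of the TDA2x or of any TI library.

```python
def classify_roadside_object(pedestrian_score: float,
                             object_temp_c: float,
                             ambient_temp_c: float,
                             score_threshold: float = 0.5,
                             min_warmth_c: float = 5.0) -> str:
    """Combine a visual pedestrian score with a thermal reading.

    pedestrian_score: 0..1 confidence from the visible-light detector.
    object_temp_c / ambient_temp_c: temperatures from the thermal sensor.
    Both thresholds are illustrative assumptions.
    """
    looks_human = pedestrian_score >= score_threshold
    is_warm = (object_temp_c - ambient_temp_c) >= min_warmth_c

    if looks_human and is_warm:
        return "pedestrian"
    if looks_human:
        # Human-like proportions but too cold to be alive, e.g. a mailbox.
        return "not_human"
    if is_warm:
        # Warm but not pedestrian-shaped; leave it for other classifiers.
        return "warm_unknown"
    return "background"
```

With these assumed thresholds, a mailbox scored 0.8 by the visual detector but measured at 12 °C on a 10 °C evening is labeled `not_human`, while the same score for an object at 30 °C is labeled `pedestrian`. A fixed warmth margin would not hold up on a hot day, which is one reason a real system would weigh more evidence than this.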

The ultimate goal is completely autonomous cars that drive themselves, and with them a world without traffic fatalities. TI is actively working on sensor and processing technologies to help customers develop the autonomous vehicle. In time, the remaining problems will be solved, and I am confident that when it comes to autonomous cars, the question is not “if” we shall get there but “when.”
